dmpop

Aircraft photography, spaghetti code, half-baked thoughts

How to set up an okay-Docker home server

An older machine running Linux and an Internet connection are all you need to host your applications and data. That sounds simple until you realize how much you need to learn to put all the pieces together and make them work. This guide makes the learning curve less steep by walking you through the process of setting up a home server that runs multiple applications in containers and has its own domain name. While working your way through the guide, you'll learn how to perform the following tasks:

- Assign a domain name to a server on a home network that has a dynamic external IP

- Install Docker on the server

- Run a simple application in a Docker container

- Use Docker Compose to enable HTTPS for the containerized application

- Add more containers

- Assign a dedicated subdomain to a container

The guide assumes that you have a working knowledge of Linux, you know your way around the command line, and you have a basic understanding of what Docker is and what it does. If Docker and containers are completely unfamiliar territory to you, head over to Docker Curriculum for a primer.

Preliminaries

- A home server is a machine running Debian 12. The server is connected to a home local network through a router. The machine is accessible via SSH.

- The router assigns a permanent internal IP address to the machine.

- The router forwards ports 80 and 443 to the machine.

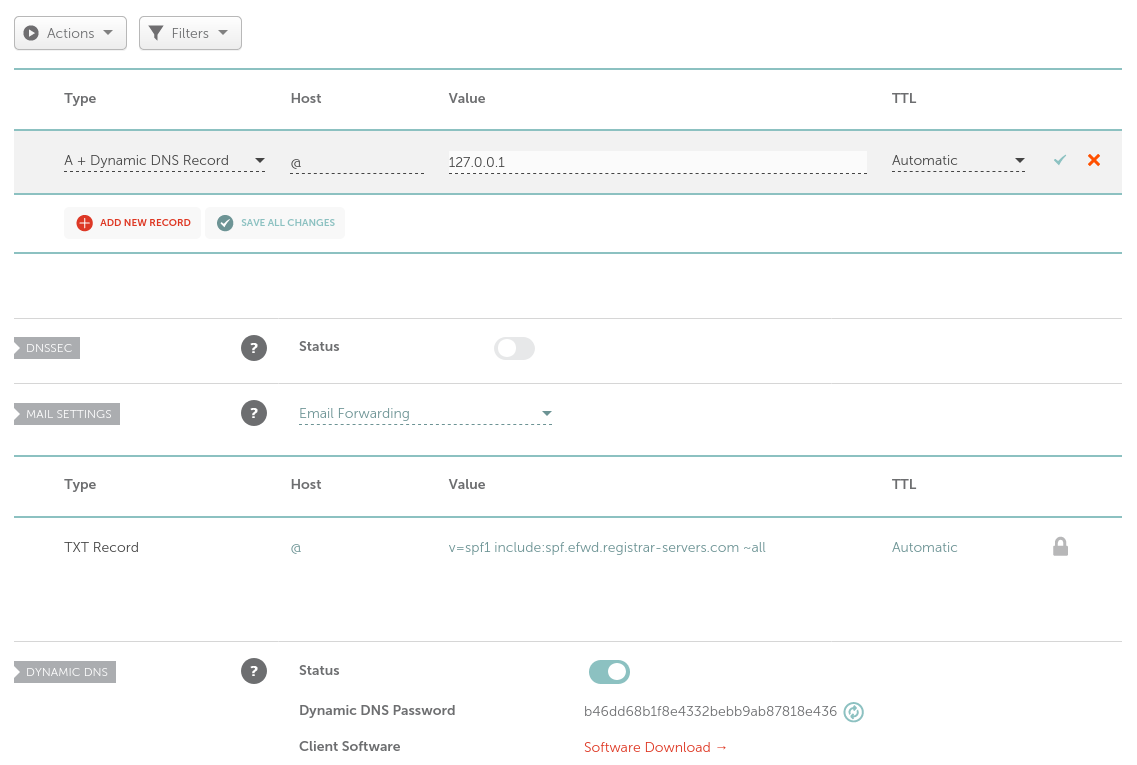

Configuring Dynamic DNS for the domain name

The first step is to get a domain name and assign it to the external IP address assigned to the router by your ISP. For this exercise, we use Namecheap. Normally, linking a domain name to an IP address is a matter of creating an A Record pointing to the IP address. But it's quite likely that your ISP assigns IP addresses dynamically, meaning the external IP changes from time to time.

Assigning the domain name to a dynamic IP address requires configuring Dynamic DNS. Here's how it's done in Namecheap.

- Switch to the Domain List section in the Dashboard.

- Press the Manage button next to the domain name, and switch to the Advanced DNS section.

- Remove all existing records, and create a new one as follows:

- Type: A + Dynamic DNS Record

- Host: @

- Value: 127.0.0.1

- TTL: Automatic

- Make sure to save the new record.

- Enable the Dynamic DNS option, and note the generated password.

Log in to the home server, and install the ddclient tool using the sudo apt install ddclient command. Open the /etc/ddclient.conf file for editing, and add the following configuration:

use=web, web=dynamicdns.park-your-domain.com/getip

protocol=namecheap

server=dynamicdns.park-your-domain.com

login=domain.tld

password=dynamic-dns-password

@.domain.tld

Replace domain.tld with the actual domain name, and dynamic-dns-password with the password generated automatically by Namecheap.

Save the changes, and restart ddclient using the sudo systemctl restart ddclient.service command.

Give it some time to do its job. If everything works correctly, the 127.0.0.1 address in the DNS record should be replaced with the current external IP.
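You can also check the result from the command line. The following is a minimal sketch, assuming getent and curl are installed and that the lookup service named in the ddclient configuration returns the address as plain text. The function names are hypothetical, and domain.tld is a placeholder.

```shell
# Hypothetical helpers for checking a Dynamic DNS update.

# Print the first IPv4 address a host name resolves to (empty if none).
resolved_ip() {
    getent ahostsv4 "$1" | awk 'NR == 1 { print $1 }'
}

# Print the external IP reported by the lookup service used in ddclient.conf.
# Assumption: the service answers over plain HTTP with just the address.
external_ip() {
    curl -fsS http://dynamicdns.park-your-domain.com/getip
}

# Succeed only when both addresses are non-empty and identical.
same_ip() {
    [ -n "$1" ] && [ "$1" = "$2" ]
}

# Usage (replace domain.tld with the actual domain name):
#   if same_ip "$(resolved_ip domain.tld)" "$(external_ip)"; then
#       echo "DNS record is up to date"
#   else
#       echo "DNS record has not been updated yet"
#   fi
```

Keep in mind that DNS changes can take a while to propagate, so a mismatch right after restarting ddclient does not necessarily indicate a problem.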

Let's containerize everything!

Our home server is going to run everything in Docker containers. This approach makes it easier to deploy and manage hosted applications, as you don't have to worry about dependencies, and there is no need to monkey around with configuration files. There is only one thing you need to do to transform the home server into a container Wundermachine, and that is to install Docker.

Install Docker

Installing Docker on Debian 12 is a matter of logging in to the server via SSH as a regular user and running the following commands:

sudo apt update

sudo apt install apt-transport-https ca-certificates curl gnupg

curl -fsSL https://download.docker.com/linux/debian/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker.gpg] https://download.docker.com/linux/debian bookworm stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt update

sudo apt install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

sudo usermod -aG docker ${USER}

Log out and back in for the new group membership to take effect. To keep things tidy, create a dedicated directory for storing all home server files and data. The following command creates the srv directory in your home:

mkdir -p ~/srv

Add finishing touches

Lastly, you need to install Git and enable unattended upgrades. Installing Git is a matter of running the sudo apt install git command. To keep the system up-to-date, enable unattended upgrades:

sudo apt update

sudo apt upgrade

sudo apt install unattended-upgrades

Run sudo nano /etc/apt/apt.conf.d/50unattended-upgrades, uncomment the Unattended-Upgrade::Automatic-Reboot "false"; line, and set it to true:

Unattended-Upgrade::Automatic-Reboot "true";

Save the changes. Enabling unattended upgrades means that you don't have to keep the home server up-to-date manually. Updates are installed automatically, so the server receives bug fixes and security patches shortly after they become available, and it reboots on its own when an update requires it.
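Before moving on, it can be worth confirming that the tools installed so far are actually available. The sketch below uses a hypothetical missing_commands helper (not part of any of the installed packages) that prints the name of every command it cannot find on the PATH.

```shell
# Print each of the given command names that is not found on the PATH.
missing_commands() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}

# Usage: an empty result means that everything is in place.
#   missing_commands docker git unattended-upgrade
#
# The Compose plugin is not a separate command, so check it through docker:
#   docker compose version
```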

Your first Docker container

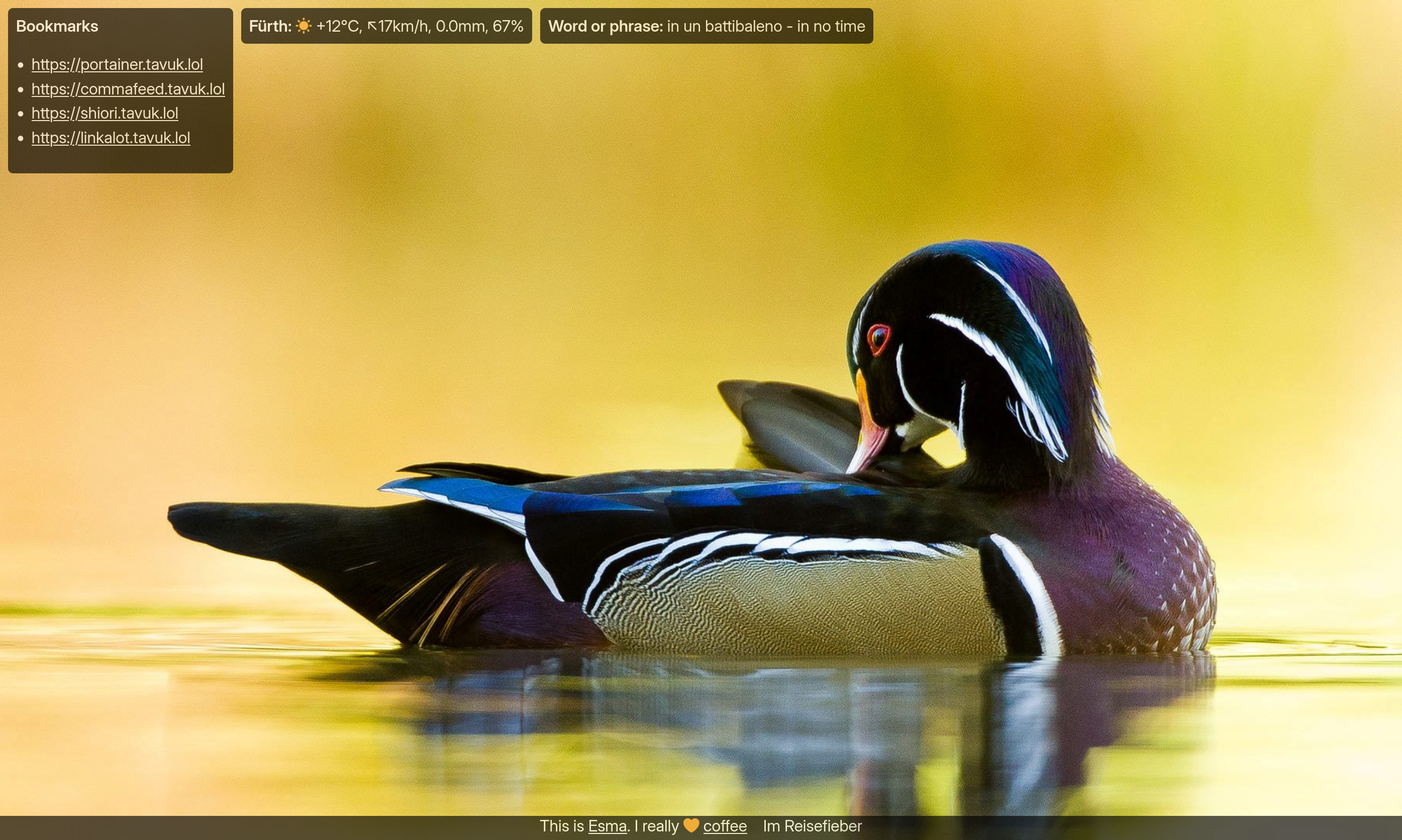

With all the preparatory work out of the way, you can spin up your very first container that serves a simple dashboard. There are plenty of dashboards out there, such as Homer, Dashy, and Heimdall, to name a few. However, for this project, we're going to use Esma, a simple landing page that shows a daily photo from Bing (or a random photo from your own photo library), current weather conditions in the specified location, a link list, and anything else you might want to add to it. Esma requires very little customization, and it's easy to deploy as a Docker container.

Normally, you'd use Docker to pull a ready-made container image and use it to start a container. But there is no container image for Esma, so you have to build it yourself. Thankfully, the supplied Dockerfile makes it a quick and simple process. Switch to the newly created srv directory, and clone the project's Git repository using the git clone https://codeberg.org/dmpop/esma.git command. Switch to the resulting esma directory, open the index.php file for editing, and modify the $title, $city, and $footer settings. Save the changes. Run the docker build -t esma . command (note the . dot at the end of the command) to build a container image. Then use the docker run command to start a container:

sudo docker run -d --rm -p 8000:8000 --name=esma -v /path/to/data:/usr/src/esma/data esma

Replace /path/to/data with the actual path to the esma/data directory (in this case, you can use $PWD/data as the path). Point the browser to http://domain.tld:8000 (replace domain.tld with the actual domain name of the home server), and you should see the landing page. To add a link list panel, create a bookmarks.txt file with a list of links, and save the file in the esma/data directory. If you want Esma to show your own photos instead of Bing's photo of the day, create a photos directory in esma/data and put photos in there.
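The container may need a moment before it starts answering requests, so a check made right after docker run can fail even when everything is fine. As a minimal sketch, the hypothetical retry helper below repeats a command a limited number of times with a pause between attempts. The helper name and the attempt and delay values are illustrative; port 8000 is the one published in the docker run command above.

```shell
# Run a command up to $1 times, sleeping $2 seconds between attempts.
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
    attempts=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        "$@" && return 0
        i=$((i + 1))
        # Pause only if another attempt is going to follow.
        [ "$i" -le "$attempts" ] && sleep "$delay"
    done
    return 1
}

# Usage (run on the server itself, assuming curl is installed):
#   retry 10 2 curl -fsS -o /dev/null http://localhost:8000 \
#       && echo "Esma is up" \
#       || echo "Esma did not respond"
```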

Putting containers behind a reverse proxy

Now we have a simple container up and running, but there is still work to be done: we need to enable the secure HTTPS protocol and make the container running Esma accessible on the standard web ports instead of port 8000. In other words, we need to replace the http://domain.tld:8000 URL with the secure and more elegant https://domain.tld. To accomplish that, we are going to deploy another container running the Caddy server. This container has two important duties: 1) automatically enable HTTPS, and 2) act as a reverse proxy, whose task is to forward incoming traffic to the specified destination. In our case, we configure Caddy to route all requests arriving on ports 80 and 443 to the container running Esma on port 8000.

To make both the Caddy and Esma containers work together, we are going to use Docker Compose, a clever way to define multiple containers and their properties (network settings, volumes, etc.). All of this is done using a docker-compose.yml file that in our case looks as follows:

services:
  caddy_reverse_proxy:
    image: caddy:latest
    restart: unless-stopped
    container_name: caddy_proxy
    ports:
      - 80:80
      - 443:443
    volumes:
      - ./Caddyfile:/etc/caddy/Caddyfile
      - caddy_data:/data
      - caddy_config:/config
    networks:
      - caddy_net

  esma:
    build:
      context: ./esma
      dockerfile: Dockerfile
    restart: unless-stopped
    volumes:
      - type: bind
        source: ./esma/data
        target: /usr/src/esma/data
    networks:
      - caddy_net

volumes:
  caddy_data:
  caddy_config:

networks:
  caddy_net:

The services part defines two containers: one container running Caddy as a reverse proxy, and the other one running Esma. The Caddy service pulls the latest container image from the Docker repository (the image: caddy:latest rule), while the build block under the esma service specifies that the container image is built locally using the Dockerfile file (the dockerfile: Dockerfile rule) in the esma sub-directory (the context: ./esma rule).

The ports block reserves ports 80 (for HTTP connections) and 443 (for HTTPS connections) for use with Caddy, while the volumes block defines volumes required by Caddy. The ./Caddyfile:/etc/caddy/Caddyfile rule indicates that the local Caddyfile containing the required Caddy configuration (more on that later) is mapped into the /etc/caddy/Caddyfile file path inside the container, which makes the file available to Caddy.

The volumes block under the esma service looks slightly different. That's because we want to map the local esma/data directory into the /usr/src/esma/data path inside the container running Esma. In other words, when you create a bookmarks.txt file in the local esma/data directory, Esma uses it inside the container.

Finally, the networks rule ensures that both containers run on the same network (caddy_net in this case).

Save the docker-compose.yml file in the srv directory above esma. In the same location, create a Caddyfile file, and specify the following configuration:

{

email <email address>

}

<domain.tld> {

reverse_proxy esma:8000

}

Replace <email address> with the email address you want the Let's Encrypt service to use with the SSL certificate it issues for the Caddy server. Replace <domain.tld> with the actual domain name, and save the changes.

Now, start the container bundle using the sudo docker compose up -d command. Point the browser to https://domain.tld, and if everything works correctly, you should be greeted by Esma's landing page.
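If Caddy fails to start, a leftover placeholder in Caddyfile is one possible culprit. The sketch below shows a simple pre-flight check you could run before starting the bundle. The has_placeholders function is a hypothetical helper, and it assumes that anything enclosed in angle brackets is a placeholder that still needs replacing.

```shell
# Succeed if the given file still contains an unreplaced <placeholder>.
has_placeholders() {
    grep -q '<[^<>]*>' "$1"
}

# Usage (run in the srv directory):
#   if has_placeholders Caddyfile; then
#       echo "Caddyfile still contains placeholders" >&2
#   else
#       sudo docker compose up -d
#   fi
```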

Adding more containers, each with its own subdomain

Now that you know how to use docker-compose.yml and Caddyfile files to start Caddy in a container and use it to direct traffic to another container defined in the same docker-compose.yml file, you can add as many containers as you want. Say, you'd like to run your own instance of the CommaFeed RSS aggregator. All you have to do is to add the following container definition in the services section of the docker-compose.yml file:

  commafeed:
    container_name: commafeed
    image: athou/commafeed:latest-h2
    restart: unless-stopped
    volumes:
      - type: bind
        source: ./commafeed
        target: /commafeed/data
    deploy:
      resources:
        limits:
          memory: 256M
    networks:
      - caddy_net

Note that the definition doesn't publish a network port for CommaFeed. This is because instead of exposing CommaFeed on a specific port, we're going to assign a dedicated subdomain to it. So instead of accessing the RSS aggregator using a URL like https://domain.tld:8082, you point the browser to https://commafeed.domain.tld. To do that, you need to create another A + Dynamic DNS Record similar to the first one, with one important difference: the Host field should contain the desired subdomain name (for example, commafeed). Next, open the /etc/ddclient.conf file for editing, and add the new subdomain as follows:

@.domain.tld, commafeed.domain.tld

Save the changes, close the file, and restart the ddclient service with the sudo systemctl restart ddclient.service command.

Finally, you need to add the following rule to Caddyfile:

commafeed.domain.tld {

reverse_proxy commafeed:8082

}

Note that 8082 in the rule above points to a port in the container. If the containers started earlier with sudo docker compose up -d are still running, stop them using sudo docker compose down. Then run sudo docker compose up -d again, and if everything works correctly, you can access CommaFeed by pointing the browser to https://commafeed.domain.tld.
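Every additional subdomain needs a site block of the same shape in Caddyfile. As a minimal sketch, the hypothetical caddy_site_block helper below prints such a block from a host name, a Compose service name, and a port inside the container. The helper is purely illustrative and is not part of Caddy or Docker.

```shell
# Print a Caddyfile site block that proxies a host name to a container port.
# Arguments: host name, Compose service name, port inside the container.
caddy_site_block() {
    printf '%s {\n\treverse_proxy %s:%s\n}\n' "$1" "$2" "$3"
}

# Usage (run in the srv directory; replace domain.tld with the actual domain):
#   caddy_site_block commafeed.domain.tld commafeed 8082 >> Caddyfile
```

The service name works as the proxy target because both containers are attached to the caddy_net network, where Docker resolves service names to container addresses.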

Creating a systemd service

After you run the sudo docker compose up -d command, all the containers run happily in the background. The restart: unless-stopped policy normally brings them back when the Docker daemon starts after a reboot, but if you'd rather not rely on that alone, you can create a simple systemd service that explicitly starts all Docker services on boot. To do this, create a /etc/systemd/system/home-server.service file, open it for editing, and enter the following configuration (replace USER with the actual user name):

[Unit]

Description=Home server

After=docker.service

Requires=docker.service

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/bin/bash -c "docker compose -f /home/USER/srv/docker-compose.yml up -d"

ExecStop=/bin/bash -c "docker compose -f /home/USER/srv/docker-compose.yml down"

[Install]

WantedBy=multi-user.target

Save the file, then run the commands below to enable and start the created systemd service:

sudo systemctl enable home-server.service

sudo systemctl start home-server.service

Going further

Using the described techniques, you can deploy practically any number of containerized applications. But as the list of containers running on your server grows, so does the effort required to monitor and manage them. At that point, you should consider using dedicated container management tools like Portainer and Watchtower. The former helps you manage containers using a web-based UI, while the latter is designed to keep container images up-to-date. And once you've done that, you'll discover that there is so much more you can do with your home server. In other words, welcome to the most elaborate rabbit hole that is self-hosting.